We recently cleared up some of the common misunderstandings people have about Kubernetes as they start experimenting with it. One of the biggest, though, deserves its own story: the belief that running Kubernetes in production is pretty much the same as running Kubernetes in a dev or test environment.

Hint: It’s not.

“When it comes to Kubernetes, and containers and microservices in general, there’s a big gap between what it takes to run in the ‘lab’ and what it takes to run in full production,” says Ranga Rajagopalan, cofounder and CTO of Avi Networks. “It’s the difference between simply running, and running securely and reliably.”

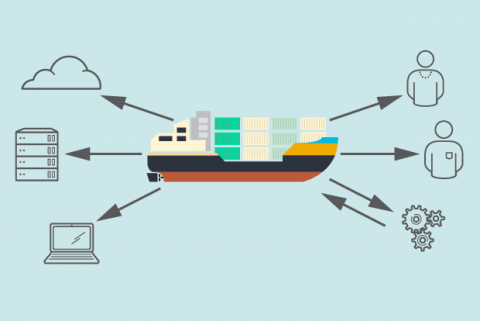

There’s an important starting point in Rajagopalan’s comment: This isn’t just a Kubernetes issue, per se, but rather more widely applicable to containers and microservices. It is relatively “easy” to deploy a container; operating and scaling containers (and containerized microservices) in production is what introduces complexity.

That means container orchestration usually follows suit. Research conducted previously by The New Stack, for example, found that container adoption in turn fuels Kubernetes adoption, as organizations look to this powerful technology to solve their operational challenges. While there are other options, Kubernetes has quickly become synonymous with orchestration and a go-to choice. And as Rajagopalan notes, there can be an outsized gap between running it in a sandbox environment and running it in production.

As more IT pros and teams tinker with containers and Kubernetes on a smaller scale, they can be in for a steep learning curve when “tinkering locally” shifts to “deploying to production.” You’ll want to clarify some common misunderstandings before that shift occurs: Here’s what IT leaders and their teams should know ahead of time.

Myth #1: Running Kubernetes in dev/test gave you a firm handle on your operational needs

Reality: Running Kubernetes in dev or test environments lets you take some shortcuts, or simply avoid some of the operational load that comes with running in production.

“The operations and security angles are the biggest differences between Kubernetes in dev/test versus in production,” says Wei Lien Dang, VP of products at StackRox. “In dev/test, if a cluster goes down, you don’t care.”

Murli Thirumale, cofounder and CEO at Portworx, frames the gap between dev/test and production as the difference between agility alone and agility paired with reliability and performance. Both execs note that the latter combination takes some work.

“The goals of the dev team for containers are app agility in developing and testing new apps and new code,” Thirumale says. “Meanwhile, the goals of IT Ops are reliability, scale, and performance of apps and data, and security. The latter needs a robust, enterprise-class, tried-and-true platform.”

Automation becomes a more critical requirement for running Kubernetes (and containers generally) in production.

“Your production clusters must be installed using some automation,” says Ranjan Bhagirathan, technical architect at Coda Global. “It has to be repeatable to guarantee consistency and also helps with recovery when needed.”

Versioning is also crucial for production operations, according to Bhagirathan. “Version control everything, such as your service deployment configurations, policies, etc. – including your infrastructure via infrastructure-as-code where possible,” Bhagirathan says. “This ensures your environments are similar. Also, be sure to version control your container images, and don’t just use the ‘latest’ tag to deploy across environments, which is an easy way to introduce drift.”
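
Bhagirathan's advice about image tags can be checked mechanically before anything reaches a cluster. The following is a minimal Python sketch rather than a production tool: the function names `is_pinned` and `unpinned_images` are hypothetical, the example manifest is invented for illustration, and the manifest is assumed to be a Deployment already parsed into a dictionary. It treats an image as pinned only if it carries a digest or an explicit tag other than `latest`.

```python
def is_pinned(image: str) -> bool:
    """Return True if the image reference has a digest or an explicit non-'latest' tag."""
    if "@" in image:
        # Digest reference such as name@sha256:<hash>.
        return True
    # A tag, if present, follows a ':' in the final path segment.
    # (A ':' earlier in the reference is a registry port, not a tag.)
    last_segment = image.rsplit("/", 1)[-1]
    if ":" not in last_segment:
        return False  # no tag given, so the runtime falls back to 'latest'
    tag = last_segment.rsplit(":", 1)[1]
    return tag not in ("", "latest")


def unpinned_images(deployment: dict) -> list:
    """List the container images in a parsed Deployment manifest that are not pinned."""
    pod_spec = deployment.get("spec", {}).get("template", {}).get("spec", {})
    containers = pod_spec.get("initContainers", []) + pod_spec.get("containers", [])
    return [c["image"] for c in containers if not is_pinned(c["image"])]


# Hypothetical manifest, for illustration only.
example_deployment = {
    "kind": "Deployment",
    "spec": {
        "template": {
            "spec": {
                "initContainers": [{"name": "init", "image": "busybox"}],
                "containers": [
                    {"name": "web", "image": "registry.example.com:5000/team/web:latest"},
                    {"name": "api", "image": "registry.example.com:5000/team/api:2.1.0"},
                ],
            }
        }
    },
}

for image in unpinned_images(example_deployment):
    print("unpinned image:", image)
```

A check like this could run in a CI pipeline against version-controlled manifests, so that a floating tag is caught in review rather than discovered as drift between environments.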

Myth #2: You’ve got reliability and security all figured out

Reality: You probably don’t, at least not yet, if you’ve only been piloting Kubernetes in a non-production environment.

The good news is that you’ll get there. It’s just a matter of planning and architecture before you go to production.

“Obviously, in production environments, the stakes are higher in terms of performance, scalability, high availability, and security,” says Amir Jerbi, CTO and cofounder at Aqua Security. “It’s important to plan for production requirements at the architecture phase, and build security and scaling controls into K8s deployment definitions, Helm charts, etc.”

Dang shares an example of how dev/test experimentation might lead to some overconfidence.

“If all the network connections are open in dev/test, that’s fine, and probably desirable to make sure every service can reach every other service. Open connections are the default setting in Kubernetes,” Dang says. This isn’t usually a tenable production strategy, though: “Once you move into production, downtime and the larger attack surface present much greater business risks.”

There’s non-trivial work involved in building a highly reliable, highly available system when shifting to containers and microservices; orchestration is what helps you do that work, but it’s not a sorcerer’s wand. The same goes for security.

“Locking down Kubernetes to limit the attack surface also takes a lot of work,” Dang says. “It’s critical to move to a least-privilege model with network policy enforcement, limiting the communications paths to only those services needed.”
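
To make the least-privilege model Dang describes more concrete, here is a hedged Python sketch that builds two Kubernetes NetworkPolicy manifests and prints them as JSON: one that denies all ingress to pods in a namespace, and one that re-opens a single path. The namespace `shop`, the labels `app: frontend` and `app: api`, port 8080, and the helper names are all assumptions made up for illustration; only the `networking.k8s.io/v1` NetworkPolicy fields themselves come from the Kubernetes API.

```python
import json


def default_deny_ingress(namespace: str) -> dict:
    """Build a NetworkPolicy that selects every pod in the namespace and allows no ingress."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {
            "podSelector": {},           # an empty selector matches all pods in the namespace
            "policyTypes": ["Ingress"],  # no ingress rules are listed, so none is allowed
        },
    }


def allow_ingress(namespace: str, name: str, to_labels: dict, from_labels: dict, port: int) -> dict:
    """Build a NetworkPolicy that lets pods with from_labels reach pods with to_labels on one TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": to_labels},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"podSelector": {"matchLabels": from_labels}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


# Hypothetical namespace, labels, and port, for illustration only.
policies = [
    default_deny_ingress("shop"),
    allow_ingress("shop", "allow-frontend-to-api", {"app": "api"}, {"app": "frontend"}, 8080),
]

# kubectl accepts JSON as well as YAML, so the output can be saved and applied as a file.
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": policies}, indent=2))
```

Starting from a deny-all policy and adding narrow allow rules reverses the open-by-default behavior that is convenient in dev/test. Note that these policies only take effect if the cluster's network plugin enforces NetworkPolicy.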

Container image vulnerabilities can quickly become critical in production, for example, whereas the threat may be limited or non-existent locally.

“Pay attention to what base images are used for building your containers. Use trusted official images wherever possible or roll your own,” Bhagirathan says. “It might be easier to quickly get things working locally using unknown images, but that can also be a security risk. You don’t want your Kubernetes clusters contributing to someone else’s Bitcoin mining efforts, for example.”

Red Hat security strategist Kirsten Newcomer encourages people to think of container security as having ten layers – including both the container stack layers (such as the container host and registries) and container lifecycle issues (such as API management). For complete details on the ten layers and how orchestration tools such as Kubernetes fit in, check out this podcast with Newcomer, or this whitepaper: Ten Layers of Container Security.