Digital data has been around for centuries in one form or another. Commercial tabulating machines have been available since the late 1800s, when they were used for accounting, inventory and population censuses. Why then do we label today the Big Data age? What changed so dramatically in the last 10-15 years that the entire IT industry is chomping at the bit?

More data? Certainly. But that’s only the tip of the iceberg. There are two big drivers that have contributed to the classic V’s (Volume, Variety, Velocity) of Big Data. The first is the commoditization of computing hardware – servers, storage, sensors, cell phones – basically anything that runs on silicon. The second is the explosion in the number of data authors – both machines and humans.

Until around the turn of the century, only the “haves” (such as Exxon or Pfizer) could afford the exorbitant price tag of the scale-up server farms needed to process oodles of geospatial and genetics data in a meaningful time frame. The combination of hardware commoditization and scale-out parallel computing brought large-scale data analysis within the reach of the “have nots”.

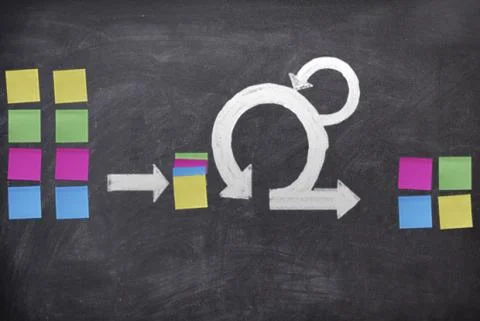

As a result, enterprises and governments looked to process even larger amounts and new types of data while enabling their constituents to run queries that weren’t feasible before. This prompted changes in the life cycle of data, from creation to deletion, that can be broadly classified under three categories:

I. Data

The first big change is the data itself. In the traditional life cycle, architects went through the laborious process of ensuring the data was complete and consistent before it was loaded into the sacred data warehouse. In fact, many cycles (and careers) were spent on ETL (Extract, Transform, & Load) processes to improve “data quality” and ensure a “single version of the truth”. These processes were typically batch-oriented and only added to the staleness of the insights spewed out by the data warehouse into mining cubes and BI tools.

The new life cycle makes no such demands of its data. It’s quite happy working with incomplete, heterogeneous data that has not been minced and ground by a gatekeeper ETL process. The focus is on getting actionable insights to business users as soon as possible, by running analytics against large chunks of data repeatedly as the algorithms get smarter and data denser.

Apache Hadoop is a great example of a technology that enables this evolution by allowing data to be captured first and a schema to be created later, diametrically opposite to the old life cycle that required a schema to be set in stone before an ounce of data could be ingested.
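To make the "capture first, schema later" idea concrete, here is a minimal sketch in plain Python. It does not use Hadoop itself; the sample records, the `read_with_schema` helper and the field names are all hypothetical and exist only to illustrate applying a schema at read time rather than at load time.

```python
import json

# Raw events are stored exactly as they arrive (hypothetical sample data).
# Nothing is validated or rejected at write time.
raw_lines = [
    '{"user": "a1", "minutes": 12, "country": "US"}',
    '{"user": "b2", "minutes": "7"}',
    'not valid json',
    '{"user": "c3", "country": "IN", "device": "phone"}',
]

def read_with_schema(lines, schema):
    """Apply a schema at read time (hypothetical helper).

    `schema` maps field name -> type constructor. Missing or
    uncoercible values become None; malformed lines are skipped.
    """
    for line in lines:
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # tolerate garbage instead of failing the whole load
        if not isinstance(record, dict):
            continue
        row = {}
        for field, cast in schema.items():
            value = record.get(field)
            try:
                row[field] = cast(value) if value is not None else None
            except (TypeError, ValueError):
                row[field] = None
        yield row

# Two different "schemas" over the same raw data, decided after capture.
usage_rows = list(read_with_schema(raw_lines, {"user": str, "minutes": int}))
geo_rows = list(read_with_schema(raw_lines, {"user": str, "country": str}))

print(usage_rows)
print(geo_rows)
```

The same raw lines serve both questions without being reloaded, which is the point of deferring the schema: a new analysis needs only a new read-time mapping, not a new ETL pass.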

II. Queries

As a result of getting access to large unrefined data sets, developers devised new programming frameworks & languages to separate signal from noise quickly and cost-effectively. Rather than write “Select * from” queries, they now write open-ended queries built on analytic models that are scored and re-scored iteratively until useful patterns emerge organically from the data and can be turned into business insights.

For instance, cell phone providers use such open-ended queries to segment their customers by analyzing call detail records, so they can create new phone plans and tweak existing ones to stem attrition and grow their subscriber base. Each cell phone call populates about 200 fields in a database record. Detecting known patterns, say a customer concerned with dropped calls or international roaming, can help the provider proactively reach out to the customer before it’s too late.
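The dropped-call case can be sketched in a few lines of Python. This is an illustration under stated assumptions, not how any provider actually does it: the two-field records, the `at_risk_customers` function and the thresholds are hypothetical stand-ins for real call detail records and a real analytic model.

```python
from collections import defaultdict

# Hypothetical, heavily simplified call detail records.
# A real record would carry far more fields than these two.
calls = [
    {"customer_id": "c1", "dropped": True},
    {"customer_id": "c1", "dropped": True},
    {"customer_id": "c1", "dropped": False},
    {"customer_id": "c2", "dropped": False},
    {"customer_id": "c2", "dropped": False},
    {"customer_id": "c3", "dropped": True},
]

def at_risk_customers(records, min_calls=2, max_drop_rate=0.5):
    """Flag customers whose dropped-call rate exceeds a threshold.

    `min_calls` and `max_drop_rate` are assumed tuning knobs; in
    practice they would be re-scored as the model is refined.
    """
    totals = defaultdict(int)
    drops = defaultdict(int)
    for record in records:
        customer = record["customer_id"]
        totals[customer] += 1
        if record["dropped"]:
            drops[customer] += 1
    return sorted(
        customer
        for customer, total in totals.items()
        if total >= min_calls and drops[customer] / total > max_drop_rate
    )

flagged = at_risk_customers(calls)
print(flagged)
```

Re-running the same function with different thresholds, or with more fields folded into the rule, is a small-scale version of the iterative scoring and re-scoring described above.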

Another change is that queries no longer always come from humans. Many enterprises also use visualization tools to help their developers ask the right questions, ones that may not have been intuitive just from looking at the raw data, much as a pie chart is easier to grasp than a table.

III. Users

Possibly the biggest change affecting the data life cycle is the nature of data stakeholders. Most business users in the past were satisfied with a small buffet of canned queries they would run at the end of the day, month, quarter or year.

In addition to traditional users, you now need to cater to tech-savvy power users, many of whom have PhDs in data science and statistics, who tend to write rogue queries that hog resources and degrade performance. To make matters worse, these power users don’t keep set schedules like traditional users did, which adds to the workload optimization woes of a data center operator.

The new data life cycle may sound like all gloom and doom, but it’s important to remember that the traditional life cycle isn’t going away in a hurry. You’ve spent time, effort and money to build your data environment, so complement it rather than reinvent it. As you build a real-time, secure, and cloud-ready data architecture, make sure it is also open and scalable enough to grow with you as your data needs evolve.