Part Two of the two-part series on the FCC’s IT modernization and journey to the cloud. Read Part One.

Most organizations are familiar with Murphy's Law: the idea that if something can go wrong, it will. It's a mantra in IT circles, and reason enough for IT organizations to prepare for every scenario they can. This very idea was likely on the mind of Dr. David Bray, CIO of the U.S. Federal Communications Commission, in the early hours of September 4, 2015, as he watched a truck with 200 servers and 400 terabytes of data drive away from the FCC, nearly getting stuck in the driveway on the way out.

How did we get here? If you haven't read Part One, go here for background on Bray's ambitious IT overhaul, two years in the making, and his plan to move all of the FCC's physical servers to an offsite commercial vendor. Bray's team had already retired more than a third of the FCC's 300 servers, for a net “weight loss” of more than two tons. It had also moved several key operations to the cloud, including the FCC's website, Consumer Help Desk, and employee email. The team then started a 28-day countdown clock to keep “Operation Server Lift” on schedule. In Part Two, Bray walks us through the final week of the mission.

Preparing for the move

"Monday, Aug. 24 is when we initiated a full storage area network (SAN) replication of all 400 terabytes of data that the FCC had," said Bray. "Our budget was so constrained we couldn’t do a mirrored replication of everything. We had to do a physical move. This meant we had to be careful because there were external applications where people could send and receive data. For certain ones, we had to stop accepting new information at the risk that it wouldn't be included in the backup. The reality is that 400 terabytes of data can't be backed up instantaneously. It was actually fully completed on Wednesday, Sept. 2."

The SAN replica and non-essential applications were physically shipped to a leased datacenter the very next day. Bray's team began an orderly shutdown of the FCC's systems only after those shipments arrived safely and were successfully powered back on. This was one of their “just in case” safety valves. On Sept. 3, the team spent the evening disconnecting hundreds of servers and securely wrapping and preparing racks for shipping. The first truck left the FCC at 10 p.m., and the last truck left eight hours later. All the trucks arrived at the data facility a couple of hours after that, and the 200 servers were carefully unpacked.

The FCC had avoided Murphy’s Law twice already: once with the shipment of its data, and again with the physical move of its servers to a commercial facility. Both went off without a hitch, but the third step did not. That morning, the Friday before the Labor Day holiday weekend, the FCC team started reconnecting the servers at the commercial facility. They quickly realized that the thousands of inter-server cables needed to connect the 200 servers did not match the FCC network topology provided to the commercial vendor.

Labor of love

"The good news was it wasn’t a hardware failure," Bray said. "Initially, we thought we’d either had a massive hardware failure or the cabling was not correct – and the hardware failure would have been significantly worse. We did have backup hardware available as a 'safety valve,' just in case, but some of these systems were so old that we couldn’t get backup hardware."

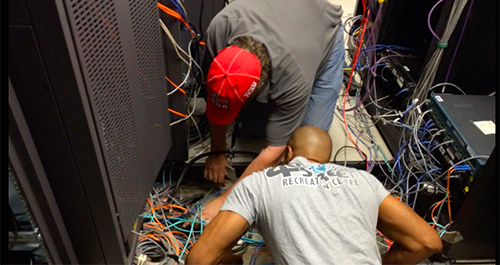

Although the cabling issue was the better of the two scenarios, it was still a significant problem. Troubleshooting cables one by one in a sea of thousands would have been like searching for needles in a haystack. The FCC had provided network topology scans and walkthroughs of its infrastructure, yet at least 50 percent of the thousands of cables did not match what was needed, so the team had to redo all of the cabling from scratch. Setting this right required FCC staff and contractors to give up their holiday weekend and work around the clock, 55 hours in total, to sort out the thousands of cables.

"This was unexpected and consumed all the time we had budgeted for surprises," said Bray. "To the entire team’s credit: new and seasoned personnel, multiple contractor and government professionals, everyone within the team rallied. And they did so without anyone having to ask. It was humbling to see that everyone was committed to making this right even if it meant working 24-hour shifts on Labor Day weekend and us purchasing all the Red Bull in the county. Because of the dedication of these hardworking individuals, 55 hours later, the inter-server cabling situation was resolved."

On Labor Day morning, Bray explained, the servers were being powered up with the correct cables. The FCC had to make up for lost time and stay focused, even though by that point the team had been working long shifts around the clock. Despite any fatigue that may have set in, the FCC's two most important systems came online the next day: its electronic comment filing system and its public electronic documents (e.g., public licenses). Additional applications followed.

"It was important that we test things first internally before we exposed the applications to the public again, because part of the value of this exercise was in essentially untangling 15 to 20 years of history at the FCC," said Bray. "In doing 'Operation Server Lift' we encountered many legacy shortcuts along the way within our applications that needed to be worked out. For example, maybe 10 or 12 years ago as a quick, temporary fix someone cross-connected this server with that server and didn’t think to tell anybody. And as a consequence, now that we've made the move to a commercial service provider a decade later, this undocumented quick fix is not working and it's not immediately clear why. The team was really good at embodying creative problem solving throughout the entire endeavor."

Cabling issues aside, by 8 a.m. on Sept. 10, all of the FCC’s external applications were available, and a day later “Operation Server Lift” was complete. In all, 200 servers, 400 applications, and 400 TB of data had been physically moved, all within a constrained budget. They went from the FCC’s two D.C. data centers to a commercial service provider that would now look after them as the FCC continued its push to 100 percent public cloud.

“As a result of the move, we reduced our rack count from 90 to less than 72,” said Bray. “The ultimate goal is in six months we'll go down even further, to 60. The benefits of a commercial service provider is when we retire a rack, we’ll see savings on the spot. The plan is FCC’s rack count will continue to go down over the next two years to, ideally, zero. When the rack count is at zero, it means that everything’s in the cloud.”

Bray continued, “Also, once we moved everything off site, we no longer had to pay for the cost per square foot in D.C. real estate for storing our servers, which you can imagine is not the cheapest. Basically, it made it so that we can be much more agile going forward in terms of moving to the cloud with everything.”

It is a testament to the FCC’s planning and contingency measures that a cabling issue was the only hiccup. And the 55 hours spent fixing it were well worth it. “Operation Server Lift” reduced the FCC’s ongoing maintenance costs from more than 85 percent of its IT budget to less than 50 percent. This shift frees up funds that the FCC can now use to move its remaining legacy applications to cloud platforms.

Bray also touched on another benefit of the move. “In some respects, this forced an accounting of all the systems and applications we had, so that we could make conscious decisions about which servers to retire, how they are all connected, what applications we had and didn't have, etc. We knew that if the move was to be successful, we really needed to understand what we had, inside and out.”

"For a lot of CIOs, taking on a new role is like buying a used car sight unseen, driving it off the lot, and only then getting a chance to look at the engine," Bray continued. "As a result of 'Operation Server Lift,' it’s now not just me but our entire team that actually truly understands the engine we have. We are all keenly aware of things we need to focus on first – either because we’ll get the biggest bang for the buck by doing it, or it’s just so important that if we don’t address it something’s going to break in the next year or two. Ideally, the funds we’ve now 'freed up' as a result of this massive operation will allow us to move with speed the remaining elements of FCC to public cloud platforms."

For a behind-the-scenes look at “Operation Server Lift,” check out these videos.