Running a few standalone containers for development purposes won’t rob your IT team of time or patience: A standards-based container runtime by itself will do the job. But once you scale to a production environment and multiple applications spanning many containers, it’s clear that you need a way to coordinate those containers to deliver the individual services. As containers accumulate, complexity grows. Eventually, you need to take a step back and group containers along with the coordinated services they need, such as networking, security, and telemetry.

That’s why technologies like the open source Kubernetes project are such a big part of the container scene.

Kubernetes automates and orchestrates Linux container operations. It eliminates many of the manual processes involved in deploying and scaling containerized applications. In other words, you can cluster together groups of hosts running Linux containers, and Kubernetes helps you easily and efficiently manage those clusters. These clusters can span hosts across public, private, and hybrid clouds. (For performance and other reasons, though, it’s often recommended that an individual cluster be limited to a single physical location.)

Let’s take a deeper look at how enterprise IT is using Kubernetes to tame container complexity and automate routine tasks, freeing IT time for other work.

What’s a Kubernetes pod?

Kubernetes fixes a lot of common problems with container proliferation by structuring containers together into a “pod.” Pods add a layer of abstraction to grouped containers, which helps you schedule workloads and provide necessary services – like networking and storage – to those containers. Kubernetes also helps you load balance across these pods and ensure you have the correct number of containers, connected together in the right way, to reliably support your workloads.
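To make the pod idea concrete, here is a minimal sketch in Python that builds a Pod manifest for two containers sharing one scratch volume. The manifest fields follow the Kubernetes `v1` Pod schema, but the helper `make_pod`, the pod name, the container names, and the image references are all hypothetical and chosen only for illustration.

```python
import json


def make_pod(name, containers, shared_volume="shared-data"):
    """Build a minimal Pod manifest whose containers share one scratch volume.

    `containers` is a list of (container_name, image) pairs. All names and
    images passed in below are illustrative assumptions, not real artifacts.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            # One pod-level volume, visible to every container that mounts it.
            "volumes": [{"name": shared_volume, "emptyDir": {}}],
            "containers": [
                {
                    "name": container_name,
                    "image": image,
                    "volumeMounts": [
                        {"name": shared_volume, "mountPath": "/data"}
                    ],
                }
                for container_name, image in containers
            ],
        },
    }


pod = make_pod(
    "web",
    [
        ("app", "example.com/web:1.0"),              # hypothetical image
        ("log-shipper", "example.com/shipper:1.0"),  # hypothetical image
    ],
)
print(json.dumps(pod, indent=2))
```

The printed JSON describes the whole group as a single unit, so the scheduler places both containers together and the volume is declared once for the pod rather than per container.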

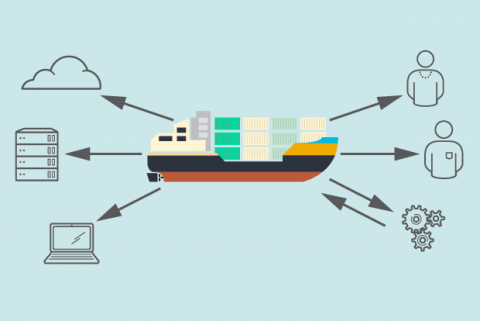

With Kubernetes – and with the help of other open source projects like Atomic Registry, flannel, heapster, OAuth, and SELinux – you can orchestrate all parts of your container infrastructure.

The standardization of containers through the Open Container Initiative (OCI) makes it easier to develop complementary technologies like Kubernetes that make containers more useful in production settings. For example, in addition to orchestrating, scaling, and maintaining the health of apps across distributed environments, Kubernetes can mount and add storage as required to provide persistent access to a specific set of data. Ongoing work in projects like CRI-O aims to further reduce any dependencies between specific container implementations and Kubernetes.

Kubernetes is also attuned to modern service deployment practices such as blue-green deployments, which introduce and test new features without affecting users. (A blue-green deployment maintains two production environments, as identical as possible. At any time, one of them is live – say, the blue. As you prepare a new release of your software, you do your final stage of testing in the green environment. Once the release passes, you switch user traffic to green, and blue becomes the idle environment.)
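One common way to express a blue-green switch in Kubernetes is to keep a single Service in front of both environments and change its label selector. The sketch below models that in Python under stated assumptions: the `color` label key, the port numbers, and the helper names `make_service` and `switch_live` are hypothetical, and this is only one possible approach, not the only way to run blue-green deployments.

```python
import copy


def make_service(name, live_color):
    """Build a Service manifest that routes traffic to one environment.

    The "color" label key is an assumed convention for this example.
    """
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # Only pods carrying both labels receive traffic.
            "selector": {"app": name, "color": live_color},
            "ports": [{"port": 80, "targetPort": 8080}],
        },
    }


def switch_live(service, new_color):
    """Return a copy of the Service that points at the other environment."""
    updated = copy.deepcopy(service)
    updated["spec"]["selector"]["color"] = new_color
    return updated


blue_live = make_service("web", "blue")
green_live = switch_live(blue_live, "green")
print(blue_live["spec"]["selector"])
print(green_live["spec"]["selector"])
```

Because only the selector changes, the blue pods keep running after the switch, which makes rolling back a matter of pointing the selector at blue again.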

Kubernetes relies on additional projects to provide the services that developers and operators might choose when deploying and running cloud-native applications in production. These pieces include:

- A container registry, through projects like Atomic Registry. Consider it the application store.

- Networking, through projects like flannel, Calico, or Weave. Collaborating containers, or microservices, need networking that lets them communicate with other containers within their namespace while keeping that traffic effectively contained.

- Telemetry, through projects such as heapster, Kibana, and Elasticsearch. Highly automated systems need logging and good analytics on running applications and their containers.

- Security, through projects like LDAP, SELinux, and OAuth. Containers and their assets need to be secure and contained.

Control over containers

From an infrastructure perspective, Kubernetes doesn’t change the fundamental mechanisms of container management. But control now happens at a higher level: you manage groups of containers as a whole, without the need to micromanage each individual container or node.
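A rough way to picture this higher-level control is a loop that compares a desired replica count with what is actually running and works out the difference. The Python sketch below is a toy model written for this article, not Kubernetes code; the function `reconcile` and the pod names are hypothetical.

```python
def reconcile(desired, running):
    """Compare a desired replica count with the names of running pods.

    Returns (number_to_start, names_to_stop). Assumes desired >= 0.
    """
    shortfall = desired - len(running)
    if shortfall > 0:
        # Too few pods: ask for more, stop nothing.
        return shortfall, []
    # Enough or too many pods: stop everything past the desired count.
    return 0, running[desired:]


print(reconcile(3, ["web-a"]))
print(reconcile(1, ["web-a", "web-b", "web-c"]))
```

The operator states only the desired count; deciding which individual containers to start or stop is left to the system.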

I’ve discussed just some of the key components that you need to effectively run containers in production. There can be many of them. Complete container application platforms, such as Red Hat OpenShift, eliminate the need to assemble those pieces yourself.

For more on how your IT leadership peers are using Kubernetes, see our related article, Kubernetes by the numbers: 10 compelling stats.
